Artificial Intelligence (AI) has grown enormously in popularity over the past few years. Researchers, businesses and entire countries are developing ever more AI-driven systems. Its applications seem endless: the economy, healthcare, the military and science all stand to benefit greatly. These developments are already well advanced, and for some countries it has become a race to be the best, whether in innovation or in sheer quantity.

Another Cold War might be closer than we think, but instead of nuclear weapons, its focus could very well be Artificial Intelligence. Even more disturbing is that it could easily turn from a cold war into an all-out war. Russian President Vladimir Putin went as far as to say that

‘The nation that leads in Artificial Intelligence will become the ruler of the world.’

He said this as a warning, because he believes that AI carries many risks and can be a threat to the entire world. His warning applies to many parts of society: while AI can boost a nation’s economy (from healthcare to heavy industry), it can also be used in warfare. The first nation to develop robots that can fight a war could win outright, as other nations might have no choice but to surrender to avoid huge losses of human life. How realistic is it for one nation to become the leader in AI? Will future wars truly be fought by killer robots? Proponents argue that AI systems are better than humans in almost every way, which could allow a nation to start and end a war quickly while other nations are still lagging behind. Furthermore, they say that the nation with the leading AI could easily spread disinformation and influence other countries. Opponents point out the many faults that still exist in AI, as well as the risk of an AI arms race, in which every new system prompts rivals to develop AI countermeasures. We believe that, without further regulation, the leader in AI will indeed become the ruler of the world.

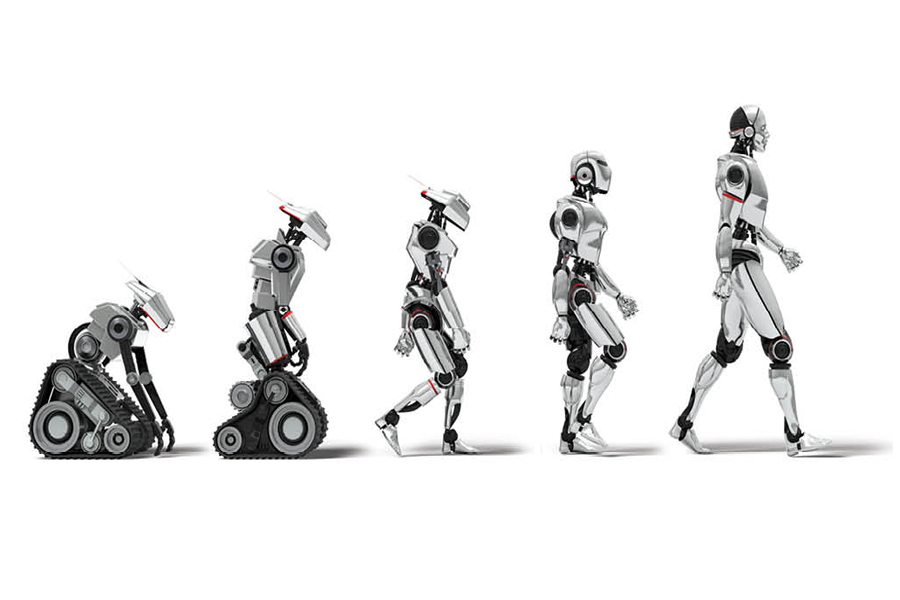

Will intelligent robots become the standard in warfare?

Taken most literally, being ‘leader of the world’ means possessing the whole world, which requires invading and conquering other countries. Fighting a war with AI systems, such as autonomous weapons, could help achieve this in several ways.

To start off, AI systems improve the chances of victory by exceeding the capabilities of human soldiers in several ways. Physically, an armoured robot can withstand far more damage than the soft skin and organs of a human body. Robots can also be stronger than human soldiers: an unarmed human has only limited strength, whereas a robot could overpower several people without any weapon at all. Autonomous systems can outperform the human mind as well. They can process information at great speed, which can lead to faster and potentially more accurate decision making. Finally, robots do not suffer from emotions and feelings that may cloud their judgement.

Another valid point is that AI soldiers could reach areas that humans cannot, either because the terrain is too hostile for people to cross or because human soldiers are simply too exhausted. They can be deployed for these ‘dull, dirty or dangerous’ missions.

Having robots fight a war could also end it faster, provided they fight against humans rather than other robots. This is an advantage to the leader in AI: the longer a war lasts, the more opportunity other nations have to get hold of the technology and recreate it for their own purposes. A quick victory shrinks that window.

Human soldiers make mistakes, and those mistakes can cost lives. AI systems could reduce the chance of such ‘human error’. However, these systems are not flawless themselves. In 2007, an Oerlikon GDF cannon fired uncontrollably during a training exercise at the South African National Defence Force (SANDF) Battle School, killing 9 soldiers and wounding 14. The SANDF suggested that a software glitch may have been the cause. Besides outright software bugs, a program can also run exactly as written while its underlying design is flawed. Because these programs are designed by humans, they can contain human errors too, including biases or assumptions that nobody anticipated. So the question is: who makes fewer mistakes? We argue that the answer is robots. They can learn faster than humans and are at most as vulnerable to bias and flawed assumptions as the humans who build them. Moreover, their sensors can observe the battlefield better than human senses. Even if a robot makes an error in a war, the chance that it works against its own side is small, and for a nation seeking dominance, an error only becomes a problem if it stands in the way of victory.

AI can improve not only the offensive side of warfare but also the defensive side, producing defence systems that surpass those of other countries. This might seem harmless, but it is in fact very dangerous: a nation confident that its defences can stop any counterattack might feel free to start a nuclear war.

Opponents might argue that these tactics would provoke protests and uprisings against the leader in AI. However, the technology already exists to build systems that target specific dissidents, identifying them through facial recognition or other forms of identification in order to intimidate or even eliminate them. Such scenarios have already been depicted in films and TV series. One is the short film ‘Slaughterbots’ (2017), featuring Stuart Russell, a computer science professor who has worked on Artificial Intelligence for over 35 years; it warns of the disastrous consequences of autonomous weapons by illustrating what killer drones could look like. Another is an episode of the Netflix science fiction series ‘Black Mirror’. In the episode ‘Hated in the Nation’, people are tracked down and killed by drones after posting a certain hashtag on social media. Many countries are already aware of these advantages and are in an arms race to make such weapons as fast and accurate as possible.

Victory without PTSD

Before we leave the subject of military victory, it is worth noting that using AI systems as warriors benefits a nation not only because they exceed the capabilities of human soldiers, but also from a moral perspective.

It is well known that soldiers often return from war with post-traumatic stress disorder (PTSD). In one survey, 88% of respondents at least somewhat agreed that PTSD in veterans is a significant problem. Among American veterans of the Iraq and Afghanistan era, 13.5% of both deployed and non-deployed veterans screened positive for PTSD. In addition, over 500,000 American troops who served in these wars over the past 13 years have been diagnosed with PTSD. Worse still, PTSD often goes hand in hand with other psychiatric diagnoses, most commonly major depressive disorder, anxiety and substance abuse.

Using AI systems in war could radically reduce the number of people who develop PTSD, because the AI systems, not humans, would be doing the fighting. Soldiers would no longer have to kill other human beings, an act that often triggers moral conflict in veterans. In addition, Armin Krishnan writes in his book about killer robots that

‘No military unit can endure more than 10% of soldiers being casualties without seriously compromising the sanity of the survivors – making the use of robots quite necessary in future high-intensity wars.’

However, using these ‘killer robots’ could also create new psychological problems. Imagine a robot that can kill its targets from a great distance, even when they are in a position of relative safety. This could spread more fear, and it could ‘disengage’ soldiers both emotionally and morally.

Of course, by the time AI is advanced enough to be used in war robots, other systems will likely have been developed to prevent exactly these situations. For example, each soldier might be given a protective AI that instantly moves them out of the line of fire. It is therefore difficult to imagine what a war fought with robots will really look like.

Disinformation as a weapon

Of course, being the leader of the world does not necessarily mean that one nation has taken over all other countries. The United States has been dubbed ‘the leader of the free world’ since the start of the Cold War, and the American president is often called ‘the most powerful person in the world’. This is not because the United States is actively conquering other nations, but because of the influence it has over them.

One important aspect of becoming the leader of the world is controlling the distribution of information. Russia is one of the most prominent nations actively spreading disinformation, sowing confusion on the internet and manipulating people’s viewpoints. During the COVID-19 pandemic, Russia conducted a global disinformation campaign aimed at promoting its own vaccines and undermining those developed in the West. In Afghanistan, which is engulfed in civil war, online disinformation also plays a significant role. It has been argued that, in the information society, disinformation is this century’s version of propaganda. When fighting a war, it is essential to win the hearts and minds of the public, and that is where propaganda comes in. If the public is not convinced, the war a country is pursuing cannot be won.

In a research project on computational propaganda (the use of algorithms to distribute disinformation), researchers concluded that social media are ‘actively used as a tool for public opinion manipulation’ and that computational propaganda is likely to become one of the most influential tools against democracy. Four threats of information manipulation stand out: (1) hyper-personalised content, (2) user profiling, (3) humans being taken out of the loop of AI systems, and (4) deepfakes. All of these can be used to manipulate a person’s viewpoint and, applied on a large enough scale, can exert enormous influence over other nations.

However, countermeasures against the spread of disinformation are being set up worldwide. There are 304 active fact-checking projects in 60 countries, aiming to counter disinformation campaigns. Trump’s election in the United States increased interest in these projects, and the number of initiatives quadrupled in the past five years. AI-based countermeasures may seem impartial, since they rely on computation rather than human judgement. Large platforms such as Twitter, Google and Facebook actively use machine-learning algorithms to find and deactivate bot-run profiles and to delete content that is flagged as sensitive.

Unfortunately, these AI solutions still have many shortcomings. One of the most significant is ‘overinclusiveness’: because these algorithms are still being developed, many of them produce false positives and false negatives, either flagging a genuine user as a bot or letting a bot pass as a genuine user. False positives are the more serious mistake here, because deleting real users’ accounts on false grounds restricts free speech. These mistakes arise mostly because AI cannot accurately read the subtext of individual statements, so implications that humans would recognise easily are often missed. The same happens wherever contextual cues are needed: sarcasm and irony are often misunderstood.
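To make the trade-off between false positives and false negatives more concrete, here is a toy sketch in Python. It assumes a hypothetical bot detector that gives every account a ‘bot score’ between 0 and 1 and flags every account at or above a chosen threshold. The accounts, scores and thresholds are invented for illustration and say nothing about how Twitter, Google or Facebook actually build their systems.

```python
from typing import NamedTuple


class Account(NamedTuple):
    name: str
    bot_score: float  # hypothetical classifier output: 0 = human-like, 1 = bot-like
    is_bot: bool      # ground truth, which a real platform would not know


# Invented example data, for illustration only.
ACCOUNTS = [
    Account("alice", 0.10, False),
    Account("carol", 0.35, False),
    Account("bob", 0.55, False),     # sarcastic human whose posts look bot-like
    Account("dave", 0.70, False),    # human who posts at very regular intervals
    Account("spam_3", 0.45, True),   # bot that imitates human phrasing
    Account("spam_4", 0.60, True),
    Account("spam_2", 0.80, True),
    Account("spam_1", 0.95, True),
]


def count_errors(threshold: float) -> tuple[int, int]:
    """Flag accounts with bot_score >= threshold; return (false_positives, false_negatives)."""
    # False positive: a genuine user who gets flagged as a bot.
    false_positives = sum(1 for a in ACCOUNTS if a.bot_score >= threshold and not a.is_bot)
    # False negative: a bot that is not flagged and passes as a genuine user.
    false_negatives = sum(1 for a in ACCOUNTS if a.bot_score < threshold and a.is_bot)
    return false_positives, false_negatives


for threshold in (0.9, 0.5, 0.3):
    fp, fn = count_errors(threshold)
    print(f"threshold={threshold}: humans wrongly flagged={fp}, bots missed={fn}")
# threshold=0.9: humans wrongly flagged=0, bots missed=3
# threshold=0.5: humans wrongly flagged=2, bots missed=1
# threshold=0.3: humans wrongly flagged=3, bots missed=0
```

Lowering the threshold catches every bot but removes more genuine users, which is the overinclusiveness problem; raising it protects real users at the cost of letting more bots through. No single threshold eliminates both kinds of error.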

Furthermore, as mentioned before, AI is not as impartial as we would like to believe. AI systems run the risk of replicating human biases, so that people in certain groups become their victims. These biases can come both from the programmers who design and train the systems and from the data the systems are trained on. The scientific community is still debating whether AI can ever be truly freed from human error, but for now these issues remain unsolved.

What does that mean for the future?

It is easy to imagine a future in which the countries rapidly developing AI systems keep a tight grip on the rest of the world. It is clear to us that AI systems come with great responsibility, and without that responsibility a leader in AI could very well become the leader of the world. Therefore, not only those who use these systems must be careful with them, but also those who develop them. We, as Artificial Intelligence students and future developers of these systems, must be informed about both the positive and negative effects of AI and the risks that come with it, and act accordingly. In 2017, Tesla CEO and engineer Elon Musk, together with 116 other technology leaders, sent an open letter to the United Nations calling for more and better regulation of AI weapons, and we can’t help but agree. Such regulation should not be limited to autonomous weapons, but should cover other fields as well, such as social media and business.